JFRCalifornia

Keeper of San Juan Secrets

Now that we’ve got BOR’s September 24-month study in, we have a pretty clear picture of where the water levels are going to be next spring. Fall and winter inflows are always much more predictable than spring and summer, and BOR is not likely to change its outflow regime between now and spring. What this all adds up to is that we’ll almost certainly see the lake drop to about 3516, which is even lower than it dropped in April 2023, when it bottomed out at 3519. It wasn’t the end of the world then, and it won’t be this time either. But it is getting to the point where power generation in the winter and spring of 2027 is going to become an issue if we don’t have a wet winter this year. Let’s see where this goes.

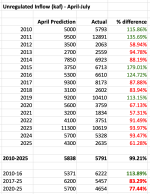

It’s also very interesting to consider how much the forecast changed for the worse in just the four months since April 2025, a time when we had a pretty clear picture of the snowpack and runoff potential. At that time, the “most probable” 24-month study forecast predicted that April-July unregulated inflow would be 4.3 maf, a pretty sizable if not spectacular spring/summer inflow. But now we see it turned out to be only 2.5 maf, or less than 60% of what they thought would be the case. It was such an outlier outcome that it came in well below even the “minimum probable” prediction in April, which forecast 3.1 maf. The “minimum probable” is the level that 90% of the modeled outcomes would exceed, so this was a true outlier result, something that even the models could not come close to predicting.
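For anyone who wants to check that math, here’s a quick sketch in Python using only the three inflow figures quoted above. The variable and function names are just mine for illustration, not anything from BOR’s study.

```python
# April-July unregulated inflow figures quoted in this post,
# in million acre-feet (maf). Names are illustrative only.
most_probable_april = 4.3   # April "most probable" forecast
min_probable_april = 3.1    # April "minimum probable" forecast
observed = 2.5              # what actually showed up


def pct_of(actual, forecast):
    """Return actual as a percentage of forecast."""
    return 100.0 * actual / forecast


pct_of_most = pct_of(observed, most_probable_april)
pct_of_min = pct_of(observed, min_probable_april)
shortfall_vs_min = min_probable_april - observed

print(f"Observed vs. most probable:    {pct_of_most:.0f}%")
print(f"Observed vs. minimum probable: {pct_of_min:.0f}%")
print(f"Shortfall below min probable:  {shortfall_vs_min:.1f} maf")
```

So the actual inflow was about 58% of the “most probable” number and only about 81% of the “minimum probable” one, roughly 0.6 maf below what was supposed to be the low end.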

It all raises the question: why did this happen?

I’m not going to speculate on an answer, since I don’t have the tools or the data to develop a credible hypothesis, but clearly, some of the assumptions inherent in the existing model with respect to converting snowpack to runoff are either no longer valid, or at least wildly off base for this year. Seems like there’s a little more work to be done at NOAA and BOR to recalibrate...

To some extent, BOR buffered the full force of the impact of poor spring runoff by releasing more from the upper reservoirs to Lake Powell than originally planned for the summer. But that was a stopgap measure, and at the end of the day, we’re still looking at a likely drop of about 45 feet from the June 2025 summer peak to the bottom next spring. That’s a big drop.

For those who see things as “glass half full,” there are upsides to this, assuming you can still launch your boat somewhere on the lake. There are places that will be visible that are normally not, reemerging canyons to hike in, all that. And it will certainly prompt a little more urgency among the states to resolve the issues related to future water use under the Compact, because if they don’t, nature will undoubtedly resolve it for them…

But if you’re looking for a low water upside, it’s finding more places like this…

